My Role

Design, Development, Data Analytics, Machine Learning

Team Members

Developer

Timeline

6 weeks | 2025

Industry

Remote Sensing

Ninety-two percent of global shipping traffic overlaps with blue, fin, and humpback whale ranges, yet strike data is sparse. From the surface we can’t see the ecosystem below - but we can hear it.

Passive acoustic monitoring reveals ecological health and could provide an early warning system for dangerous whale-ship encounters. However, these enormous troves of raw data are difficult to parse or draw insight from.

From ~80,000 Hours of Raw Recordings

How might we better understand the marine ecosystem and visualize the impact of human activity on marine life?

We built an interactive experience and trained a custom machine-learning classifier to transform raw audio and sparse detections into detailed, second-by-second identifications of marine and human sounds.

See the live experience

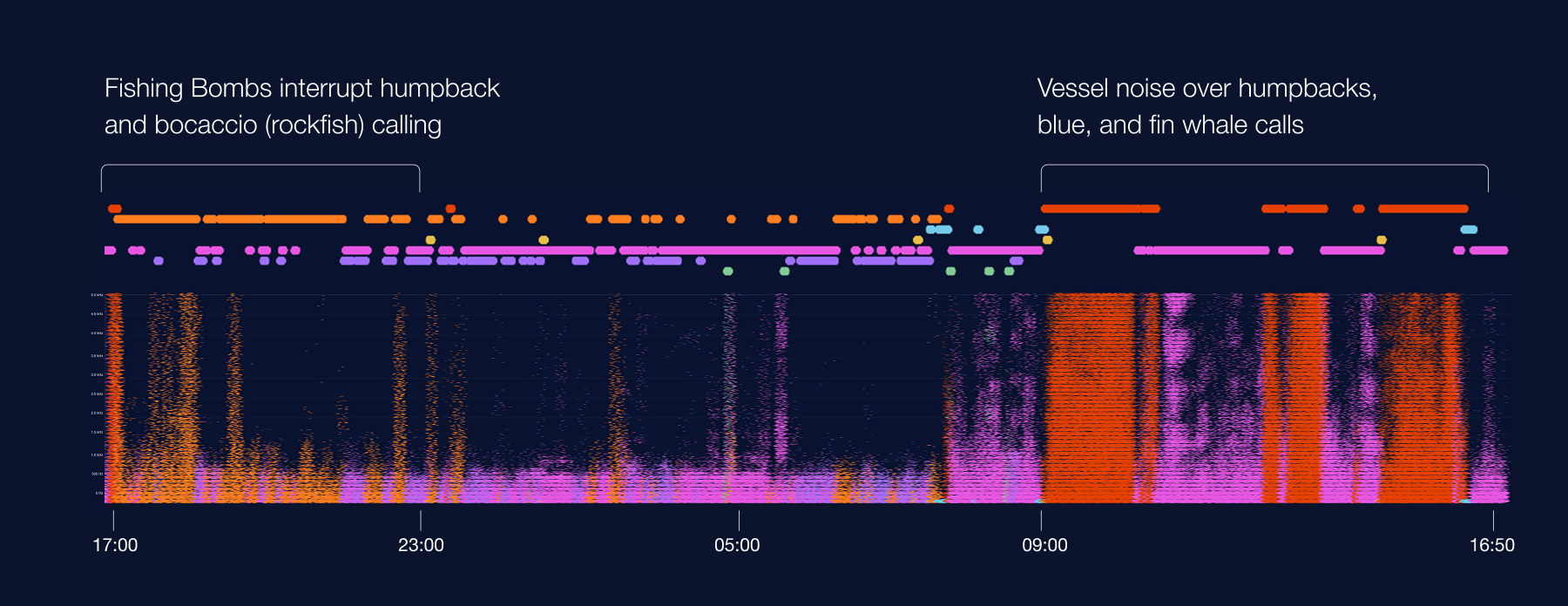

However, noise from ships and seal bomb explosions (used for fishing) overlaps with these frequencies, interfering with their ability to navigate, find food, and communicate.

By monitoring the soundscape we can better understand the marine ecosystem and the effects of anthrophony on it.

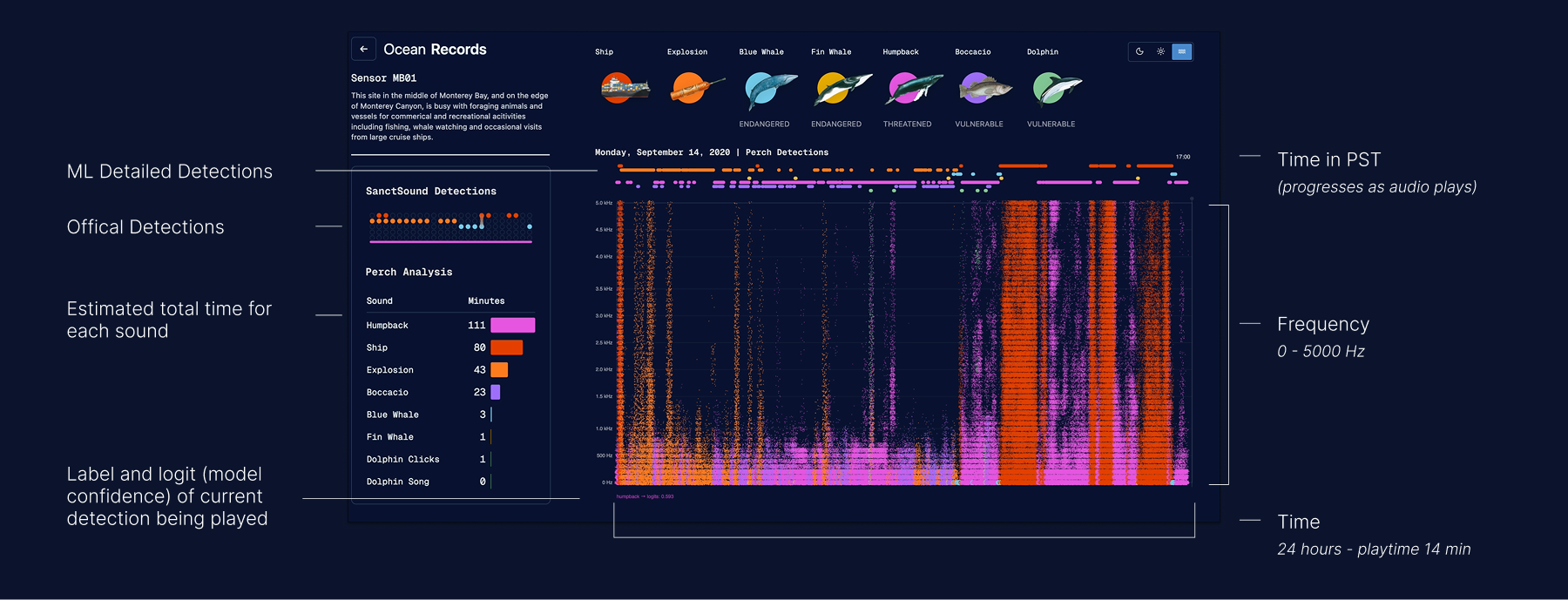

Toggle across sensors to see four years of seasonal patterns in marine and human sounds, while live shipping tracks for each year appear on the left.

Explore monthly trends and hour-level detections showing where human-made sounds (anthrophony) and marine sounds (biophony) directly overlapped.
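One way to picture the hour-level overlap is as a grouping step: bucket each detection by hour, then keep the hours that contain at least one marine sound and at least one human-made sound. The sketch below is only an illustration under assumptions. The label names, the `overlapping_hours` helper, and the sample timestamps are hypothetical and are not taken from the project's data or code.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical label sets; the real classifier's label names may differ.
BIOPHONY = {"blue_whale", "fin_whale", "humpback"}
ANTHROPHONY = {"ship", "seal_bomb"}


def overlapping_hours(detections):
    """Return the sorted hours containing both marine and human-made sounds.

    `detections` is an iterable of (timestamp, label) pairs.
    """
    labels_by_hour = defaultdict(set)
    for timestamp, label in detections:
        # Truncate each timestamp to the start of its hour.
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        labels_by_hour[hour].add(label)
    return sorted(
        hour
        for hour, labels in labels_by_hour.items()
        if labels & BIOPHONY and labels & ANTHROPHONY
    )


# Made-up sample detections, for illustration only.
detections = [
    (datetime(2025, 1, 1, 3, 12), "humpback"),
    (datetime(2025, 1, 1, 3, 47), "ship"),
    (datetime(2025, 1, 1, 4, 5), "fin_whale"),
    (datetime(2025, 1, 1, 6, 30), "seal_bomb"),
]
overlaps = overlapping_hours(detections)
```

Here only the 03:00 hour qualifies, because it is the only hour containing both a whale call and a ship.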

See and hear 24 hours underwater in just 14 minutes as a custom ML classifier turns raw audio into second-by-second soundscapes that reveal the beauty of the marine sounds and the overlap of human noise.

Globally, one of the most diverse marine mammal assemblages by species count and abundance.

From Raw Audio to Detections

Machine Learning Training Process

Perch was initially trained on birdsong, but with few-shot training it outperforms dedicated whale classifiers at classifying marine sounds.
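To give a feel for why a few labeled examples can be enough, here is a minimal sketch of one common few-shot approach: a nearest-prototype classifier on top of fixed embeddings. It is not the project's actual training code and does not call Perch. The 4-dimensional vectors are synthetic stand-ins for real audio embeddings, and the function names and labels are hypothetical.

```python
import math
import random


def normalize(vector):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


def fit_prototypes(examples):
    """Average the unit-length embeddings of each class into one prototype.

    `examples` maps a label to a short list of embedding vectors.
    """
    prototypes = {}
    for label, vectors in examples.items():
        unit = [normalize(v) for v in vectors]
        dim = len(unit[0])
        mean = [sum(v[i] for v in unit) / len(unit) for i in range(dim)]
        prototypes[label] = normalize(mean)
    return prototypes


def classify(embedding, prototypes):
    """Return the label whose prototype has the highest cosine similarity."""
    e = normalize(embedding)
    scores = {
        label: sum(a * b for a, b in zip(e, p))
        for label, p in prototypes.items()
    }
    return max(scores, key=scores.get)


# Synthetic stand-ins for embeddings: five noisy examples per class.
rng = random.Random(0)
centers = {
    "humpback": [1.0, 0.0, 0.0, 0.0],
    "ship": [0.0, 1.0, 0.0, 0.0],
    "seal_bomb": [0.0, 0.0, 1.0, 0.0],
}
support = {
    label: [[c + rng.gauss(0, 0.1) for c in center] for _ in range(5)]
    for label, center in centers.items()
}
prototypes = fit_prototypes(support)
predicted = classify([0.1, 0.9, 0.0, 0.0], prototypes)
```

The pretrained model does the heavy lifting by producing embeddings in which similar sounds sit close together, so the few-shot step only has to learn where each new class lives in that space.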

Data and Tools

Audio on for the best experience.

Showed how passive acoustic monitoring offers a multi-dimensional look into ocean ecosystem patterns.

Created an emotional understanding of anthropogenic noise pollution even within sanctuary areas.

Demonstrated how machine learning models like Perch can scale up detections and unlock the full power of passive acoustic monitoring.

Next Steps

Working on Ocean Records made me fall in love with making big, complex data understandable and emotionally resonant. Turning 80,000 hours of underwater audio into clear, interactive insights pushed me to blend design craft with cutting-edge machine learning, training classifiers and shaping visual systems that reveal patterns people can’t see from the surface.

Through collaboration with ML scientists, iterative analysis, and careful storytelling, I saw how design and machine learning bring clarity to complex climate and ecological data.